Lord of the Flies, the famous novel by William Golding, is about a group of boys who get stuck on an uninhabited island and try to run things on their own, but fail. In the book, Jack talks about how important it is to follow rules and set up a way for the boys to rule themselves. He says, “We aren’t wild people. We’re English, and in everything, the English are the best. So, we have to do what’s right.” In the end, the boys turn into little more than wild animals.

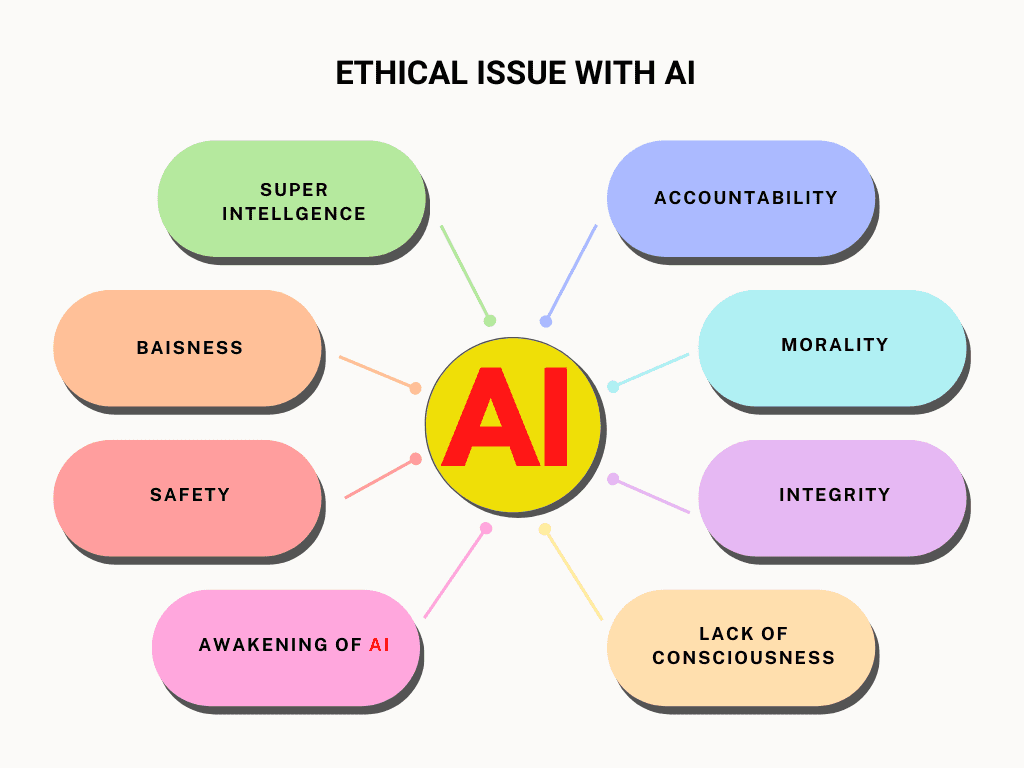

The book shows the importance of rules and the bad things that can happen when they aren’t followed. If nothing else, it is a lesson for governments and regulators about how to run things. It shows that self-regulation, or no regulation at all, can sometimes end very badly. This lesson is especially important in a world where there are still few rules about Artificial Intelligence (AI).

Because there aren’t enough rules, people, companies, and even non-state actors can use AI in harmful ways. A lack of accountability and oversight, along with unclear laws, is a recipe for disaster. The policy vacuum around deepfakes is a prime example of this. Deepfakes “use powerful techniques from machine learning (ML) and artificial intelligence (AI) to change or create audio and video content that has a high chance of fooling people.” Many of us have probably seen a deepfake of Tom Cruise that was so good it was hard to tell apart from the real Tom Cruise.

Deepfakes have some problems

While we should appreciate the technology, we should also be aware of how dangerous deepfakes can be.

First of all, deepfake videos can be used to spread false information and propaganda because they are so convincing. They make it hard for people to tell the difference between fact and fiction.

Second, people have used deepfakes to show someone in a bad or embarrassing situation. For example, there are a lot of deepfake pornographic videos of famous people. Such photos and videos are not only an invasion of people’s privacy, but also a form of harassment. As the technology gets better, it will become much easier to make these kinds of videos.

Third, deepfakes have been used to commit financial fraud. In 2019, scammers used AI-powered software to trick the CEO of a U.K. energy company into thinking he was on the phone with the head of the German parent company. As a result, the CEO sent a large sum of money, €220,000, to a person he thought was a supplier. The deepfake audio closely mimicked the voice of the CEO’s boss, right down to his German accent.

Causing trouble in the region

Deepfakes can also become a dangerous tool for hostile neighboring countries and non-state actors. “If you have unfriendly neighbors, this could be a way for them to cause trouble in different places—in the US with North Korea or Iran, or in Europe with Russia or Syria,” he says. “This technology allows these countries to flood YouTube with false content.”

Deepfakes could be used to influence the results of elections. Recently, Taiwan’s cabinet amended election laws to make it illegal to share deepfake videos or pictures. The changes reflect Taiwan’s growing worry that China is spreading false information to sway public opinion and election results.

The same could happen in the next general elections in India. In China, rules for content creators who alter facial and voice data went into effect on January 10, 2023.

Deepfakes can also be used for espionage. Government and defence officials could be blackmailed with fake videos into revealing state secrets. In 2019, the Associated Press found a LinkedIn profile under the name Katie Jones that seemed to be a front for AI-enabled espionage. The profile was connected to influential people in Washington, D.C.

In March 2022, Ukrainian President Volodymyr Zelensky said that a social media video in which he seemed to tell Ukrainian soldiers to surrender to Russian forces was a fake. Deepfakes could also be used to make videos that appear to show the military or the police “breaking the law” in conflict areas, which could cause trouble in many countries. Such deepfakes could push some people toward extremism, incite violence, or help terrorist groups recruit.

As the technology improves, deepfakes could also make it possible for people to dismiss real content as fake, especially if it shows them doing something wrong or illegal. Danielle Keats Citron and Robert Chesney have called this the “Liar’s Dividend”: as the public becomes more aware of deepfake technology, people can exploit that awareness to deny that genuine content is real.

The need for laws

At the moment, few countries have laws in place that deal specifically with the malicious use of deepfakes. There are laws against making or spreading false or misleading information about candidates or political parties during an election period, and governments and their agencies are also responsible for making people aware of their rights.

But these aren’t enough. Rules that require candidates to have all their political ads on TV and social media sites pre-approved for accuracy and fairness say nothing about how dangerous deepfake content could be.

There is often a delay between when new technologies come out and when laws are made to deal with the problems and challenges they cause. In every country, the legal framework for AI isn’t enough to deal with all of the problems that AI has created.

Laws and regulations globally:

Taiwan: The cabinet approved amendments to election laws to punish the sharing of deepfake videos or images.

China: It has introduced regulations prohibiting the use of deepfakes deemed harmful to national security or the economy, including content that references state secrets. These rules apply to content creators who alter facial and voice data. It has also issued draft regulations on the use of deepfakes in online streaming services.

India: It is drafting a law that would put ownership of such information at the author’s discretion in “case of violation.”

England and Wales: The UK government introduced a bill for a new ‘serious crime’ offence of publishing fake news that may cause public alarm, anxiety, or distress.

Sweden: The Swedish Government is drafting legislation that will allow Chinese firms to implement facial recognition technology without the need for a court order.

Nordic countries: Nordic data protection laws are often much more stringent than those in the United States and other European countries.

The malicious use of deepfakes and the broader topic of AI should be regulated by two separate sets of laws. Legislation shouldn’t get in the way of innovation in AI, but it should recognise that deepfake technology can be used to commit crimes and set out rules for dealing with it when that happens.

We can’t always count on self-regulation to work. At least, that’s what we’ve learned from Lord of the Flies.
